IoT AI with Ioto

Artificial Intelligence (AI) significantly enhances edge devices by enabling more intelligent, autonomous operations. Recent advances in cloud-hosted large language models (LLMs) are enabling transformative applications in the IoT space.

Developers typically select from three principal AI integration patterns: on-device models, cloud-based models, and hybrid models.

On-device language models operate entirely within the local hardware environment. This approach offers data privacy, reduced latency, and consistent operation regardless of network conditions, making it ideal for real-time applications or devices with intermittent connectivity or stringent privacy requirements. However, the complexity and scale of these models are constrained by the limited computational resources of edge devices.

Cloud-based language models offload computationally intensive processing to cloud servers, enabling the use of robust, large-scale LLMs that surpass the resource capabilities of edge devices. This design provides advanced features, seamless scalability, and simplified updates. Nevertheless, it relies on continuous internet connectivity and may introduce latency.

Hybrid approaches combine on-device models with cloud-based models. In this pattern, tasks that are privacy-sensitive or critical are executed locally, while more complex, resource-intensive operations that are not time-sensitive are processed in the cloud. This approach combines the privacy and responsiveness of on-device processing with the new capabilities offered by the latest generation of cloud-based models.

Ioto IoT AI

Ioto provides an intuitive AI library that simplifies interactions with cloud-based LLMs, facilitating tasks such as data classification, sensor data interpretation, information extraction, and logical reasoning. This capability is particularly beneficial for applications in predictive maintenance, smart agriculture, healthcare, smart homes, and environmental monitoring. It’s ideal for analyzing non-real-time sensor data using the power of an LLM.

The Ioto AI library can invoke cloud-based LLMs and run local agents that operate with full access to device context.

OpenAI and Foundation Models

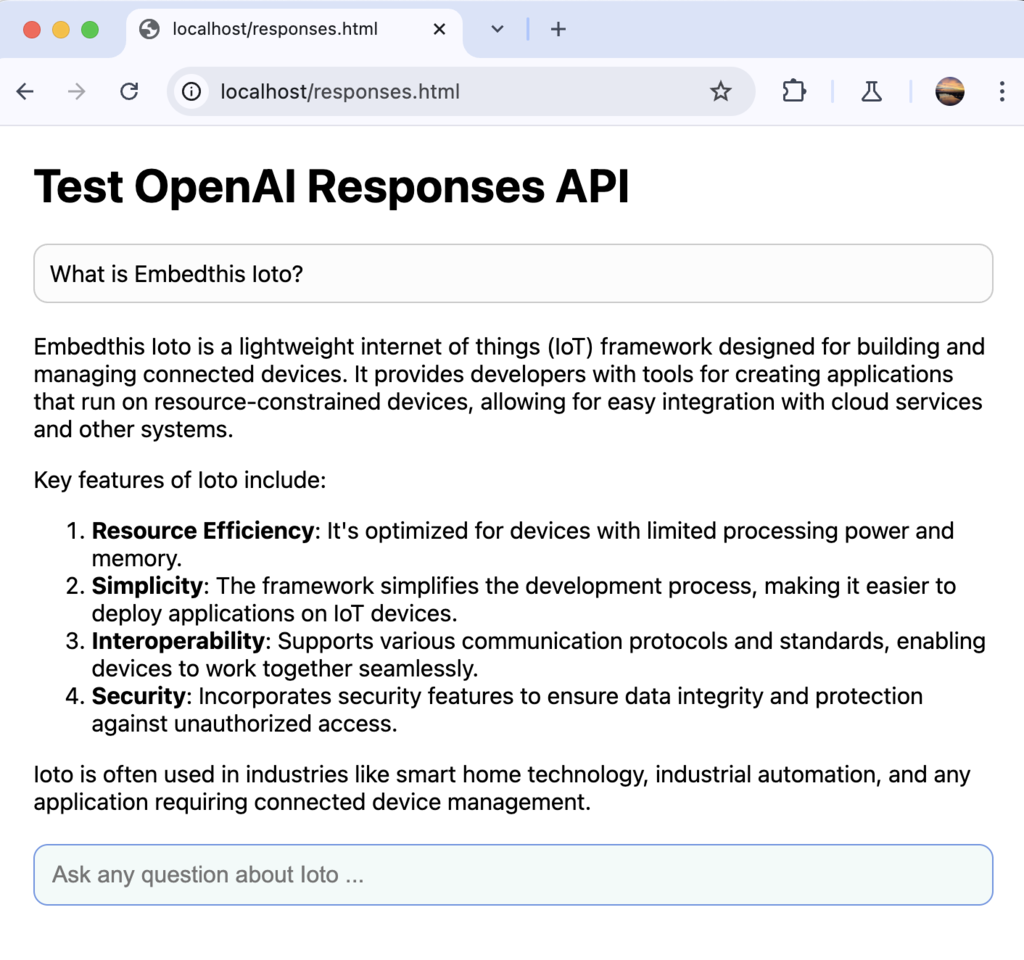

Ioto supports the standard OpenAI Chat Completions API and also implements the newer OpenAI Responses API, which is designed to help developers create advanced AI agents and workflows that can perform cloud-based tasks such as web searches and file retrieval, and invoke local agents and tools.

The standard OpenAI Chat Completions API is supported by most other foundation model providers. We expect other vendors to follow OpenAI's lead and add support for the Responses API to their offerings.
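To make the difference between the two APIs concrete, here is a minimal sketch that formats the smallest useful request body for each one. The Chat Completions API takes a `messages` array of role/content pairs, while the Responses API accepts a plain `input` string. The `formatRequest()` helper is hypothetical, and it performs no JSON escaping, so it is only suitable for simple prompts.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

typedef enum { API_CHAT_COMPLETIONS, API_RESPONSES } Api;

/*
    Format a minimal request body for either API into buf.
    No JSON escaping is done: the prompt must not contain quotes or backslashes.
    Returns the snprintf result (characters needed, excluding the NUL).
 */
static int formatRequest(char *buf, size_t size, Api api, const char *model, const char *prompt)
{
    assert(buf && model && prompt);
    if (api == API_CHAT_COMPLETIONS) {
        /* Chat Completions: a list of messages, each with a role */
        return snprintf(buf, size,
            "{\"model\":\"%s\",\"messages\":[{\"role\":\"user\",\"content\":\"%s\"}]}",
            model, prompt);
    }
    /* Responses: the prompt can be passed directly as "input" */
    return snprintf(buf, size, "{\"model\":\"%s\",\"input\":\"%s\"}", model, prompt);
}
```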

Getting Started with Ioto AI

The Ioto distribution includes an ai sample app that demonstrates the AI facilities of Ioto.

Before building, you need an OpenAI account and an API key. You can get an API key from the OpenAI API Keys page.

Once you have an API key, edit the apps/ai/config/ioto.json5 file to define your API key and preferred model. Alternatively, you can provide your OpenAI key via the OPENAI_API_KEY environment variable.

```json5
ai: {
    enable: true,
    provider: "openai",
    model: "gpt-4o",
    endpoint: "https://api.openai.com/v1",
    key: "sk-proj-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"
},
services: {
    ai: true,
    ...
},
```

The services.ai setting controls whether the AI service is compiled into the agent, while the ai.enable setting enables or disables it at runtime.
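The two-level switch can be pictured with the following sketch. `BUILD_AI_SERVICE` and `aiServiceActive()` are hypothetical names used for illustration only; they are not Ioto's actual build macros or APIs. The point is that the runtime ai.enable value only matters when the service was compiled in.

```c
#include <assert.h>
#include <stdbool.h>

/*
    Hypothetical build-time flag standing in for services.ai.
    When it is 0, the AI code is not compiled at all.
 */
#ifndef BUILD_AI_SERVICE
#define BUILD_AI_SERVICE 1
#endif

/* Return true if the AI service should run, given the runtime ai.enable value */
static bool aiServiceActive(bool aiEnable)
{
#if BUILD_AI_SERVICE
    return aiEnable;
#else
    (void) aiEnable;    /* Not compiled in: the runtime setting has no effect */
    return false;
#endif
}
```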

If you are using a foundation LLM other than OpenAI, you can define the API endpoint for that service via the endpoint property.
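The sketch below shows one plausible way a client could resolve the key and endpoint settings. It assumes, without confirmation from Ioto, that OPENAI_API_KEY takes precedence over the key property when both are set. The helpers `resolveKey()` and `buildUrl()` are hypothetical. The default endpoint and the `/responses` and `/chat/completions` paths are the standard OpenAI ones, and many OpenAI-compatible providers expose the same paths under their own base URL.

```c
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

#define DEFAULT_ENDPOINT "https://api.openai.com/v1"

/* Assumption: a non-empty OPENAI_API_KEY overrides the configured ai.key value */
static const char *resolveKey(const char *configKey)
{
    const char *envKey = getenv("OPENAI_API_KEY");
    return (envKey && *envKey) ? envKey : configKey;
}

/*
    Join the configured endpoint (or the OpenAI default) with an API path
    such as "/responses". Returns 0 on success, -1 if buf is too small.
 */
static int buildUrl(char *buf, size_t size, const char *endpoint, const char *path)
{
    int len;

    assert(buf && path);
    if (!endpoint || !*endpoint) {
        endpoint = DEFAULT_ENDPOINT;
    }
    len = snprintf(buf, size, "%s%s", endpoint, path);
    return (len < 0 || (size_t) len >= size) ? -1 : 0;
}
```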

Building Ioto and the AI Sample App

When you build the Ioto Agent, you can select the ai sample app to experiment with the AI capabilities. This will enable the Ioto AI service.

```shell
make APP=ai
```

AI Sample App

The AI sample app has five web pages that are used to initiate different tests:

| App | Page | Use Case | API Case |

|---|---|---|---|

| Chat | chat.html | ChatBot | Use the OpenAI Chat Completions API |

| Chat | responses.html | ChatBot | Use the new OpenAI Response API |

| Chat | stream.html | ChatBot | Use the new OpenAI Response API with streaming |

| Chat | realtime.html | ChatBot | Use the OpenAI Chat Real-Time API |

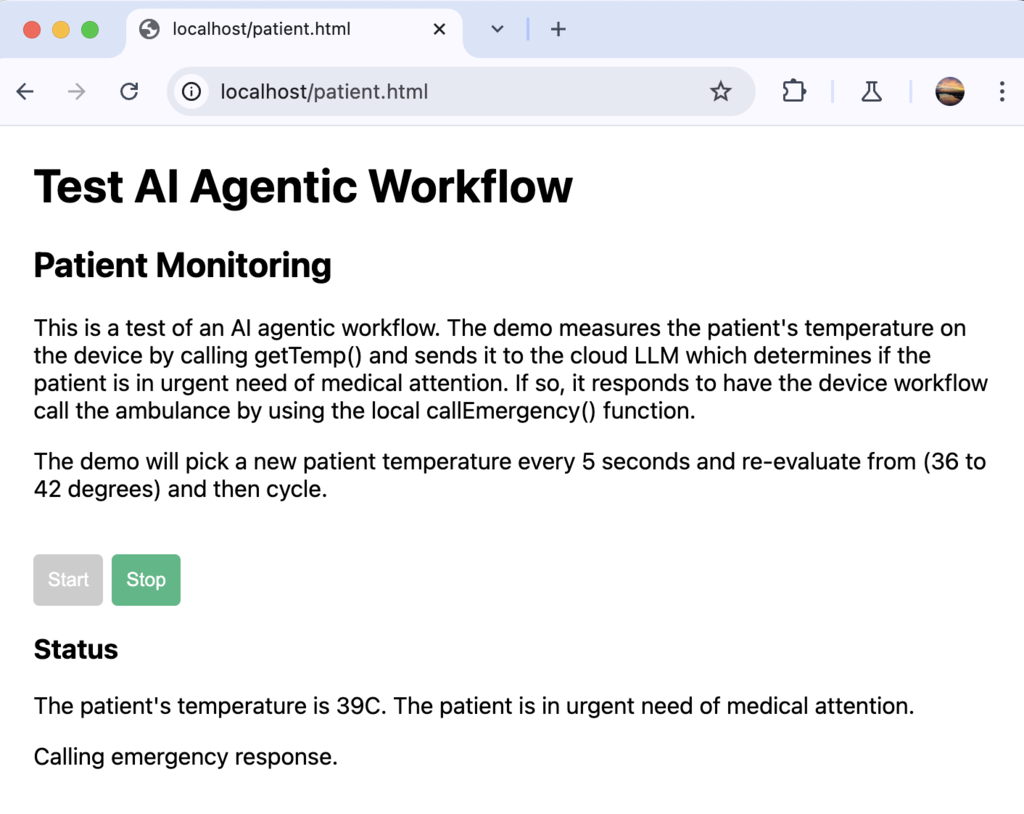

| Patient | patient.html | Patient Monitoring | Use the new OpenAI Response API and invoke sub-agents |

The Patient app demonstrates a patient monitor built as an AI agentic workflow. The app measures the patient's temperature locally by calling the getTemp() function and sends the reading to the cloud LLM, which determines whether the patient urgently needs medical attention. If so, the LLM instructs the device workflow to call an ambulance using the local callEmergency() function. The web page has two buttons to start and stop monitoring. This app demonstrates the OpenAI Responses API together with local agent functions.
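The control flow of this agentic loop can be sketched in plain C. In the real app the cloud LLM makes the decision; here the hypothetical `mockLlmDecide()` fakes it with a fixed 40 °C threshold so the loop can run offline. `stubGetTemp()` and `stubCallEmergency()` are hypothetical stand-ins for the app's getTemp() and callEmergency() functions.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

/* Simulated device state for the stand-in functions */
static double currentTemp = 37.0;
static int emergencyCalls = 0;

static double stubGetTemp(void) { return currentTemp; }
static void stubCallEmergency(void) { emergencyCalls++; }

/*
    Hypothetical stub for the cloud LLM. The real model receives the
    temperature and decides; a fixed threshold fakes that decision here.
    Returns the name of the local tool to invoke, or NULL for none.
 */
static const char *mockLlmDecide(double temp)
{
    return temp >= 40.0 ? "callEmergency" : NULL;
}

/* One monitoring step: measure locally, ask the "LLM", run any requested tool */
static bool monitorStep(void)
{
    double temp = stubGetTemp();
    const char *tool = mockLlmDecide(temp);

    if (tool && strcmp(tool, "callEmergency") == 0) {
        stubCallEmergency();
        return true;
    }
    return false;
}
```

The start and stop buttons on the web page would correspond to starting and stopping a timer that calls a step like this periodically.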

The Chat demo is similar to the consumer ChatGPT website. Each web page is a simple ChatBot that issues requests via the Ioto local web server to the relevant OpenAI API. The requests are relayed to the OpenAI service and the responses are passed back to the web page to display.


The sample apps register web request action handlers in the aiApp.c file. These handlers respond to the web requests and in turn issue API calls to the OpenAI service. Responses are then passed back to the web page to display.

Note: The AI App does not require cloud-based management to be enabled.

Code Example

Here is an example that calls the Responses API to ask a simple question. It uses file search (retrieval-augmented generation, or RAG) to augment the LLM's pre-trained knowledge, and a local function to get the local weather temperature.

```c
#include "ioto.h"

// Local tool handler, defined below
static char *agentCallback(cchar *name, Json *request, Json *response, void *arg);

void example(void)
{
    cchar   *vectorId = "PUT_YOUR_VECTOR_ID_HERE";
    cchar   *text;
    char    buf[1024];
    Json    *request, *response;

    /*
        SDEF turns the literal JSON5 text into a single string.
        SFMT is used to format strings with variables.
        jsonParse converts the string into a JSON object.
     */
    request = jsonParse(SFMT(buf, SDEF({
        model: 'gpt-4o-mini',
        input: 'What is the capital of the moon?',
        tools: [{
            type: 'file_search',
            vector_store_ids: ['%s'],
        }, {
            type: 'function',
            name: 'getWeatherTemperature',
            description: 'Get the local weather temperature',
        }],
    }), vectorId), 0);

    response = openaiResponses(request, agentCallback, 0);
    if (response) {
        // Extract the LLM response text from the JSON payload
        text = jsonGet(response, 0, "output_text", 0);
        printf("Response: %s\n", text ? text : "(none)");
        jsonFree(response);
    }
    jsonFree(request);
}
```

The openaiResponses API takes a JSON object that represents the OpenAI Responses API parameters. The SDEF macro is a convenience that makes it easier to define JSON objects in C code, and the SFMT macro formats printf-style arguments into the supplied buffer. The jsonParse API parses the resulting string and returns an Ioto Json object, which is passed to the openaiResponses API.
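The following sketch approximates what SDEF and SFMT do, using plain C stand-ins so it compiles without Ioto. `SKETCH_SDEF`, `SKETCH_SFMT` and `formatModelRequest()` are hypothetical names, and they illustrate the general technique (preprocessor stringification plus `snprintf`) rather than reproduce Ioto's definitions. Stringification collapses source whitespace, including newlines, into single spaces, which is why the JSON5 text can span several lines inside the macro call.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Stand-in: turn the bare JSON5 tokens into one string literal */
#define SKETCH_SDEF(...)        #__VA_ARGS__

/* Stand-in: format into buf (which must be an array, not a pointer) and yield buf */
#define SKETCH_SFMT(buf, ...)   (snprintf(buf, sizeof(buf), __VA_ARGS__), buf)

/* Build a request template with the model name substituted for %s */
static char *formatModelRequest(char *buf, size_t size, const char *model)
{
    assert(buf && model);
    snprintf(buf, size, SKETCH_SDEF({
        model: '%s',
        input: 'Hello'
    }), model);
    return buf;
}
```

For example, `formatModelRequest(buf, sizeof(buf), "gpt-4o")` yields the single-line text `{ model: 'gpt-4o', input: 'Hello' }`, which a relaxed JSON5 parser can then consume.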

The response returned by openaiResponses is a JSON object that can be queried using the Ioto JSON library jsonGet API. The output_text field contains the complete response output text.
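As a simplified model of how a combined output_text value can be assembled, the sketch below concatenates the text parts of a response and skips other part types such as refusals. The `ContentPart` struct and `collectOutputText()` are hypothetical, a flattened view of the Responses API output. That Ioto builds its output_text field this way is an assumption, by analogy with the `output_text` convenience property in OpenAI's own SDKs.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical, flattened view of one content part in a response */
typedef struct ContentPart {
    const char *type;   /* e.g. "output_text" or "refusal" */
    const char *text;   /* Generated text for "output_text" parts, else NULL */
} ContentPart;

/*
    Concatenate every "output_text" part into buf, skipping other types.
    Truncates safely if buf is too small. Returns the resulting length.
 */
static size_t collectOutputText(const ContentPart *parts, size_t count, char *buf, size_t size)
{
    size_t len = 0;

    assert(buf && size > 0);
    buf[0] = '\0';
    for (size_t i = 0; i < count; i++) {
        if (strcmp(parts[i].type, "output_text") != 0 || !parts[i].text) {
            continue;
        }
        size_t n = strlen(parts[i].text);
        if (n > size - 1 - len) {
            n = size - 1 - len;     /* Leave room for the terminating NUL */
        }
        memcpy(buf + len, parts[i].text, n);
        len += n;
        buf[len] = '\0';
    }
    return len;
}
```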

The agentCallback function is triggered when the LLM needs to invoke a local tool. It is defined as follows:

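```c
/*
    Called when the LLM asks the device to run a local tool.
    The returned string is sent back to the LLM as the tool result.
 */
static char *agentCallback(cchar *name, Json *request, Json *response, void *arg)
{
    if (smatch(name, "getWeatherTemperature")) {
        // getTemp() is application-supplied; like sclone(), it is assumed to return an allocated string
        return getTemp();
    }
    return sclone("Unknown function, cannot comply with request.");
}
```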
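As the number of local tools grows, a lookup table can replace the chain of smatch() tests. The sketch below is plain C with hypothetical names: `dupString()` stands in for sclone(), `dispatchTool()` for the callback body, and `getWeatherTemperature()` returns a fixed reading. It assumes, as the sclone() call above suggests, that the callback returns a heap-allocated string that the caller frees.

```c
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical tool handler type: returns a heap string the caller frees */
typedef char *(*ToolFn)(void);

/* Duplicate a string on the heap (stand-in for Ioto's sclone) */
static char *dupString(const char *s)
{
    size_t n = strlen(s) + 1;
    char *copy = malloc(n);
    if (copy) {
        memcpy(copy, s, n);
    }
    return copy;
}

/* Hypothetical sensor reading, formatted as text for the LLM */
static char *getWeatherTemperature(void)
{
    char buf[32];
    snprintf(buf, sizeof(buf), "%.1f C", 21.5);
    return dupString(buf);
}

/* Table of tool names the LLM may request, and their handlers */
static const struct { const char *name; ToolFn fn; } tools[] = {
    { "getWeatherTemperature", getWeatherTemperature },
};

/* Look up a tool by name; unknown names get an explanatory reply */
static char *dispatchTool(const char *name)
{
    assert(name);
    for (size_t i = 0; i < sizeof(tools) / sizeof(tools[0]); i++) {
        if (strcmp(name, tools[i].name) == 0) {
            return tools[i].fn();
        }
    }
    return dupString("Unknown function, cannot comply with request.");
}
```

Adding a tool then means adding one table row, and the tool names in the table should match the `name` values declared in the request's `tools` array.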

Consult the OpenAI Responses API documentation for parameter details.

Want More?

See the apps/src/ai app included in the Ioto Agent source download for the example responses.html web page, which uses the Responses API, and the patient.html web page, which uses the OpenAI Responses API and local agent functions.

For API details, consult the Ioto AI API documentation and the OpenAI API documentation.

Reach out if you have any questions or feedback by posting a comment below or contacting us at sales@embedthis.com.

Wrapping Up

Ioto makes it easy to integrate powerful AI capabilities into your IoT devices without the complexity. Whether you're building a smart appliance, medical sensor, or environmental monitor, Ioto's flexible architecture and built-in AI tools help you move faster.

Download the Ioto Agent, try out the sample apps, and start building smarter devices today.
